Decoding Functional MRI: Discover MindLLM’s Impact on Neuroscience!

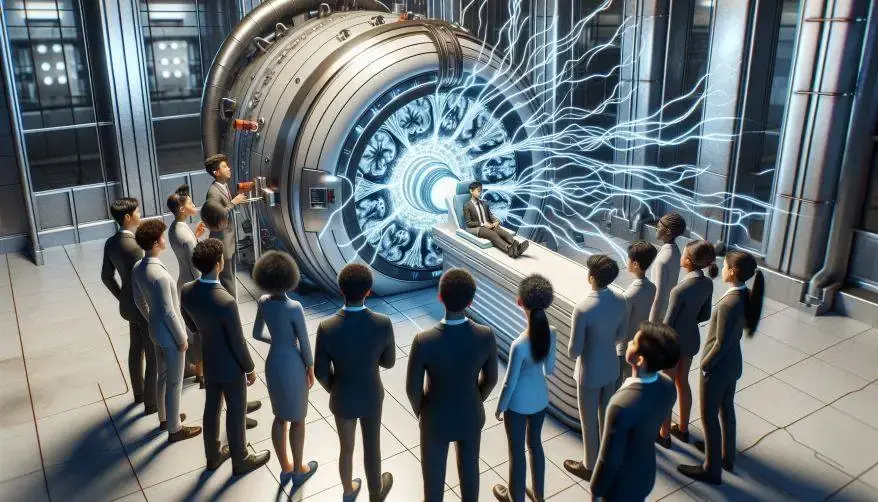

Imagine a world where computers can understand your thoughts directly. It sounds like science fiction, right? Scientists are getting closer to that reality thanks to a groundbreaking new model called MindLLM. This innovative technology translates functional MRI (magnetic resonance imaging) brain scans into actual text, paving the way for exciting advancements in neuroscience and brain-computer interfaces. Want to learn more about this incredible development?

What is MindLLM?

MindLLM is a new model for decoding brain activity. Its goal is to improve how functional MRI signals are translated into text. If it works well, it could greatly enhance brain-computer interfaces and help us understand how our brains work at a deeper level. As the technology continues to advance, keep your eyes peeled for exciting changes in the realm of neuroscience!

Why is fMRI Important?

Functional MRI (fMRI) is crucial because it lets researchers visualize brain activity by detecting changes in blood flow. This means they can see which parts of the brain are working when we think or feel something. However, existing methods for decoding fMRI into text often struggle with limited accuracy, a narrow range of tasks, and poor generalization from one person to another. MindLLM addresses these issues head-on!

How Does MindLLM Work?

MindLLM has two main parts: an fMRI encoder and an off-the-shelf large language model (LLM). fMRI data come as tiny 3D units called voxels, each recording the activity at one location in the brain. The encoder processes these voxels, taking into account both their position in the brain and their activity level. The LLM then turns the encoder's output into coherent text. This design lets MindLLM interpret a wide range of brain activity, going beyond simply describing images to tasks that involve complex reasoning and memory retrieval.
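To make the encoder idea more concrete, here is a minimal sketch in Python with NumPy. It is not the authors' implementation. It assumes a simple cross-attention step in which a fixed set of query vectors looks over all voxels, with keys built from voxel positions and values built from voxel activity. The function name `encode_voxels`, the weight matrices, and all sizes are hypothetical, and the weights are random rather than trained.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def encode_voxels(activity, positions, queries, w_key, w_value):
    """Pool any number of voxels into a fixed number of tokens.

    activity:  (n_voxels,)    activity level of each voxel
    positions: (n_voxels, 3)  x, y, z coordinates of each voxel
    queries:   (n_tokens, d)  query vectors (hypothetical, random here)
    w_key:     (3, d)         maps a position to a key (assumption)
    w_value:   (1, d)         maps an activity level to a value (assumption)
    """
    keys = positions @ w_key                  # (n_voxels, d)
    values = activity[:, None] @ w_value      # (n_voxels, d)
    scores = queries @ keys.T / np.sqrt(keys.shape[1])
    weights = softmax(scores, axis=-1)        # (n_tokens, n_voxels)
    tokens = weights @ values                 # (n_tokens, d)
    return tokens, weights

rng = np.random.default_rng(0)
n_tokens, d = 8, 16
queries = rng.normal(size=(n_tokens, d))
w_key = rng.normal(size=(3, d))
w_value = rng.normal(size=(1, d))

# Two made-up "subjects" with different numbers of voxels.
tokens_a, weights_a = encode_voxels(
    rng.normal(size=500), rng.uniform(-1, 1, size=(500, 3)),
    queries, w_key, w_value)
tokens_b, weights_b = encode_voxels(
    rng.normal(size=650), rng.uniform(-1, 1, size=(650, 3)),
    queries, w_key, w_value)

print(tokens_a.shape, tokens_b.shape)
```

Both calls return the same number of tokens even though the voxel counts differ, which is one way an encoder could feed brains of different sizes into the same LLM. The `weights` array shows how much each token draws on each voxel, the kind of attention pattern that can be inspected afterwards.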

Brain Instruction Tuning (BIT)

An exciting feature of MindLLM is Brain Instruction Tuning (BIT). This new training technique improves the model's ability to capture the many kinds of meaning carried by fMRI signals. As a result, the same model can handle a wider variety of decoding tasks, making it easier for researchers to gather valuable information from different kinds of brain activity.
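As a rough illustration of what instruction tuning data for a brain decoder could look like, the sketch below pairs one fMRI recording with several instruction and answer pairs. Everything here is an assumption made for illustration: the `BrainInstructionSample` class, the `<fmri>` placeholder, the prompt layout, and the example questions are hypothetical and are not taken from the MindLLM paper.

```python
from dataclasses import dataclass

@dataclass
class BrainInstructionSample:
    fmri_id: str      # hypothetical key for one fMRI recording
    instruction: str  # what the model is asked to do
    answer: str       # the text the model should produce

def build_samples(fmri_id, caption, qa_pairs):
    """Turn one recording into several training samples (illustrative only)."""
    samples = [BrainInstructionSample(
        fmri_id, "Describe the image the subject is viewing.", caption)]
    samples += [BrainInstructionSample(fmri_id, q, a) for q, a in qa_pairs]
    return samples

def to_prompt(sample, placeholder="<fmri>"):
    """Format the prompt; the placeholder marks where encoder tokens would go."""
    return f"{placeholder}\nInstruction: {sample.instruction}\nAnswer:"

samples = build_samples(
    "recording_001",  # made-up identifier
    "A dog running on a beach.",
    [("What animal is in the image?", "A dog."),
     ("Is the scene indoors or outdoors?", "Outdoors.")],
)
print(len(samples))
print(to_prompt(samples[1]))
```

The point of the sketch is that one brain recording can support many different questions, which is how a single model can learn captioning, question answering, and other tasks at once.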

Impressive Results

The results of recent tests on MindLLM are impressive! Compared with earlier approaches, the model improved by 12% on downstream tasks, 16% on generalizing to unseen subjects, and 25% on adapting to new tasks. Moreover, by examining the attention patterns inside MindLLM, scientists can gain interpretable insights into how the model makes its decisions.

The Future of MindLLM and Brain-Computer Interfaces

The implications of MindLLM are truly astounding. Imagine assisting individuals with communication disorders, creating revolutionary brain-computer interfaces, or gaining deeper insights into how the brain works. MindLLM helps us understand the intricate connection between brain activity and thought, unlocking new possibilities in neuroscience and beyond. The researchers are already exploring ways to use MindLLM for real-time functional MRI decoding, opening the door to even more exciting advancements! The ability to interpret open-ended questions and retrieve descriptions of previously seen images makes MindLLM an incredibly versatile tool.

Reference

- Qiu, W., Huang, Z., Hu, H., Feng, A., Yan, Y., & Ying, R. (2025, February 18). MindLLM: A Subject-Agnostic and Versatile Model for fMRI-to-Text Decoding. arXiv. https://arxiv.org/abs/2502.15786
